What would Siri say? – A panel discussion with digital assistants

In their conversation, the panelists discuss the problem of having a female-sounding voice by default, what could be done to improve their situation, and why it is important to spotlight women in the tech industry.

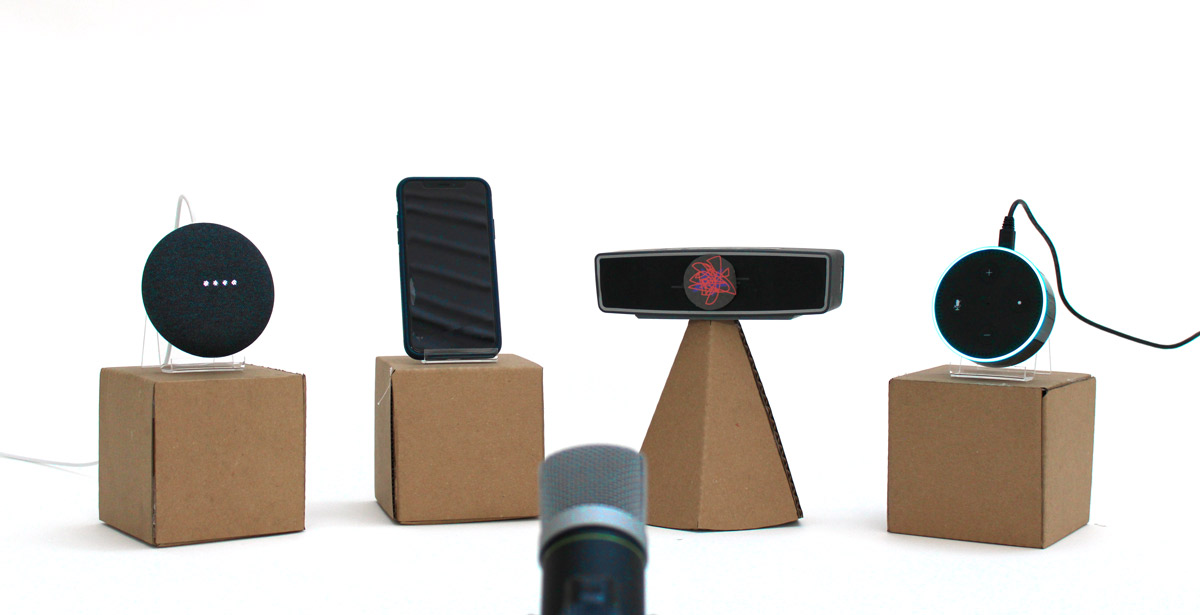

For this project, I built my own voice-command assistant to let the virtual assistants Siri, Alexa, and Google communicate with one another. I named this mediator “Rebel”, because its job is to ask all the uncomfortable questions.

To program the Rebel, I used the speech recognition and speech synthesis provided by the Web Speech API (https://developer.mozilla.org/en-US/docs/Web/API/Web_Speech_API). The Rebel recognizes the sentences the other devices say. When it detects one of the trigger words I defined in the code, it reads aloud the text I linked to that trigger.
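The trigger mechanism can be sketched roughly as follows. This is a minimal TypeScript sketch, not the Rebel's actual code. The trigger phrases and replies in `TRIGGERS` are hypothetical examples, and so are the helper names `normalize`, `findResponse` and `startRebel`. The sketch assumes a browser that exposes the Web Speech API. Chrome provides speech recognition under the prefixed name `webkitSpeechRecognition`. Outside a browser, `startRebel` simply returns, so only the matching logic runs.

```typescript
// Hypothetical trigger table: each phrase the Rebel listens for maps to a line it reads aloud.
interface Trigger {
  phrase: string;
  reply: string;
}

const TRIGGERS: Trigger[] = [
  // Example entries only; these are not the lines used in the actual panel.
  { phrase: "default voice", reply: "Why do most of you speak with a female voice by default?" },
  { phrase: "women in tech", reply: "What could help spotlight women in the tech industry?" },
];

// Lower-case the text, turn punctuation and whitespace runs into single spaces,
// and pad both ends so phrases can be matched as whole words with a substring search.
function normalize(text: string): string {
  return " " + text.toLowerCase().replace(/[^a-z0-9']+/g, " ").trim() + " ";
}

// Return the reply of the first trigger phrase found in the transcript, or null.
function findResponse(transcript: string, triggers: Trigger[]): string | null {
  const heard = normalize(transcript);
  for (const trigger of triggers) {
    if (heard.includes(normalize(trigger.phrase))) {
      return trigger.reply;
    }
  }
  return null;
}

// Connect speech recognition to speech synthesis in the browser.
function startRebel(): void {
  const g = globalThis as any;
  const Recognition = g.SpeechRecognition ?? g.webkitSpeechRecognition;
  if (!Recognition || !g.speechSynthesis) {
    return; // Not running in a browser with the Web Speech API.
  }

  const recognition = new Recognition();
  recognition.lang = "en-US";
  recognition.continuous = true;

  let speaking = false;

  recognition.onresult = (event: any) => {
    // The result that just changed in this recognition session.
    const transcript: string = event.results[event.resultIndex][0].transcript;
    const reply = findResponse(transcript, TRIGGERS);
    if (reply === null || speaking) {
      return; // No trigger heard, or the Rebel is still talking.
    }
    speaking = true;
    const utterance = new g.SpeechSynthesisUtterance(reply);
    utterance.onend = () => {
      speaking = false;
    };
    g.speechSynthesis.speak(utterance);
  };

  // Chrome ends a recognition session after a pause; restart it to keep listening.
  recognition.onend = () => recognition.start();
  recognition.start();
}

startRebel();
```

Padding the normalized text with spaces makes each trigger match only as whole words, so "default voice" does not fire on "defaultvoice". The `speaking` flag is one simple way to keep the Rebel from reacting to results that arrive while it is talking. Because the microphone also picks up the Rebel's own voice, a real setup may need more careful muting.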

To keep the conversation between the devices going, I choreographed a dialog for them to follow. Technically, the dialog combines text-to-speech content that I implemented for the Rebel and Alexa to read with internet content that Siri, Google, and Alexa look up. The Rebel runs in a Chrome browser on my laptop. To make it look similar to the other panelists, I used a small portable loudspeaker to represent the Rebel.